Overview

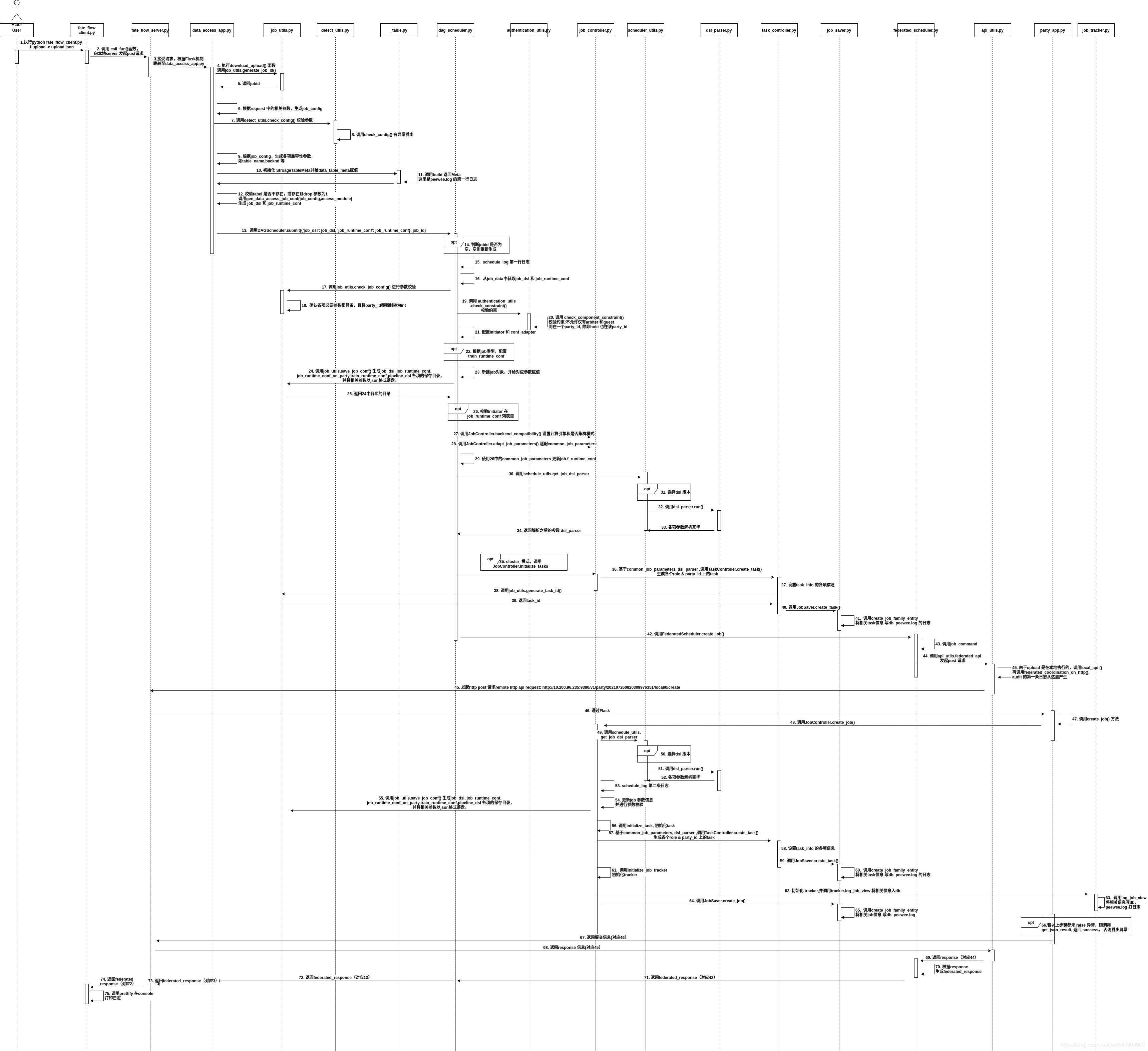

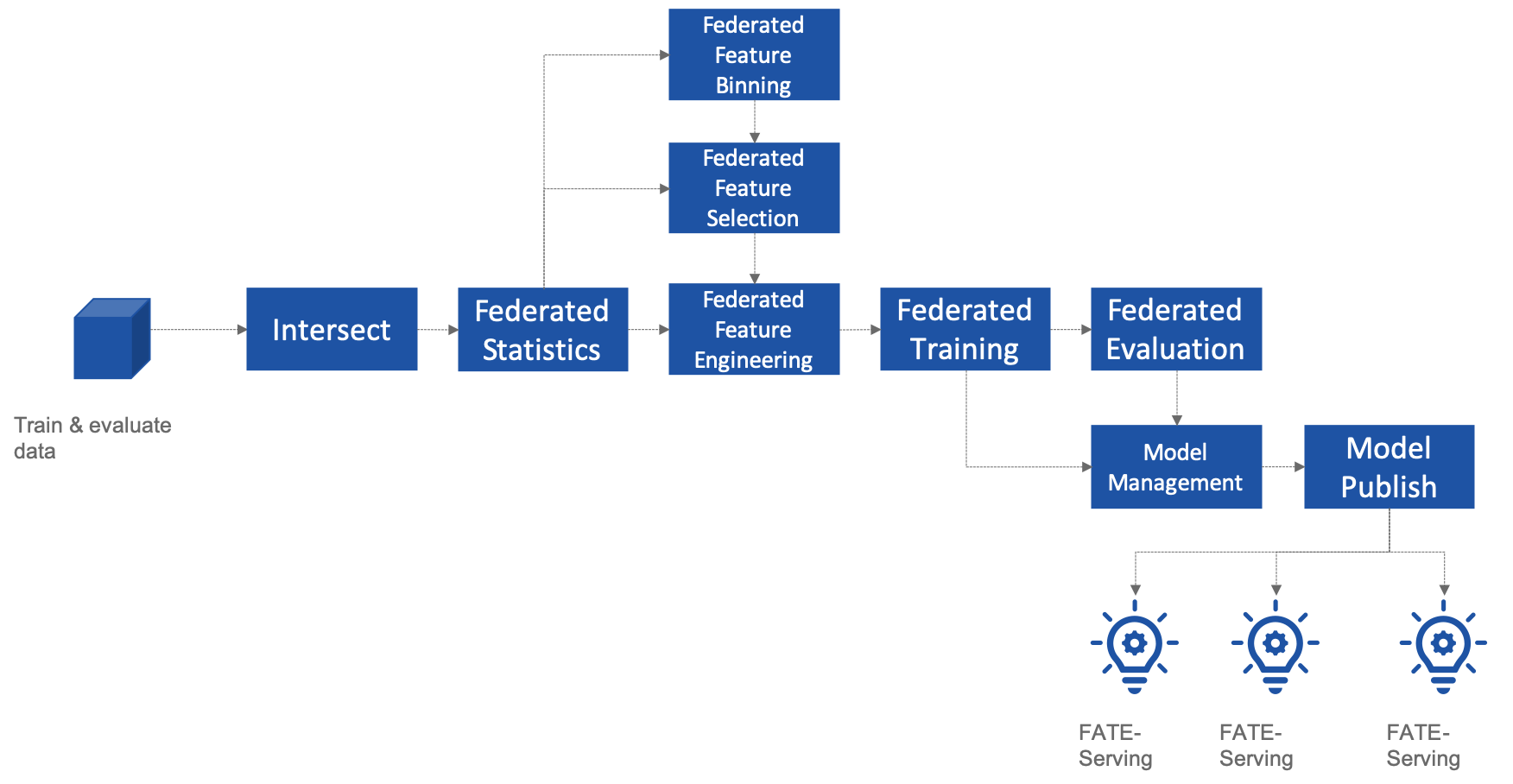

As FATE's flowchart shows, the first component of a typical job is dataio.

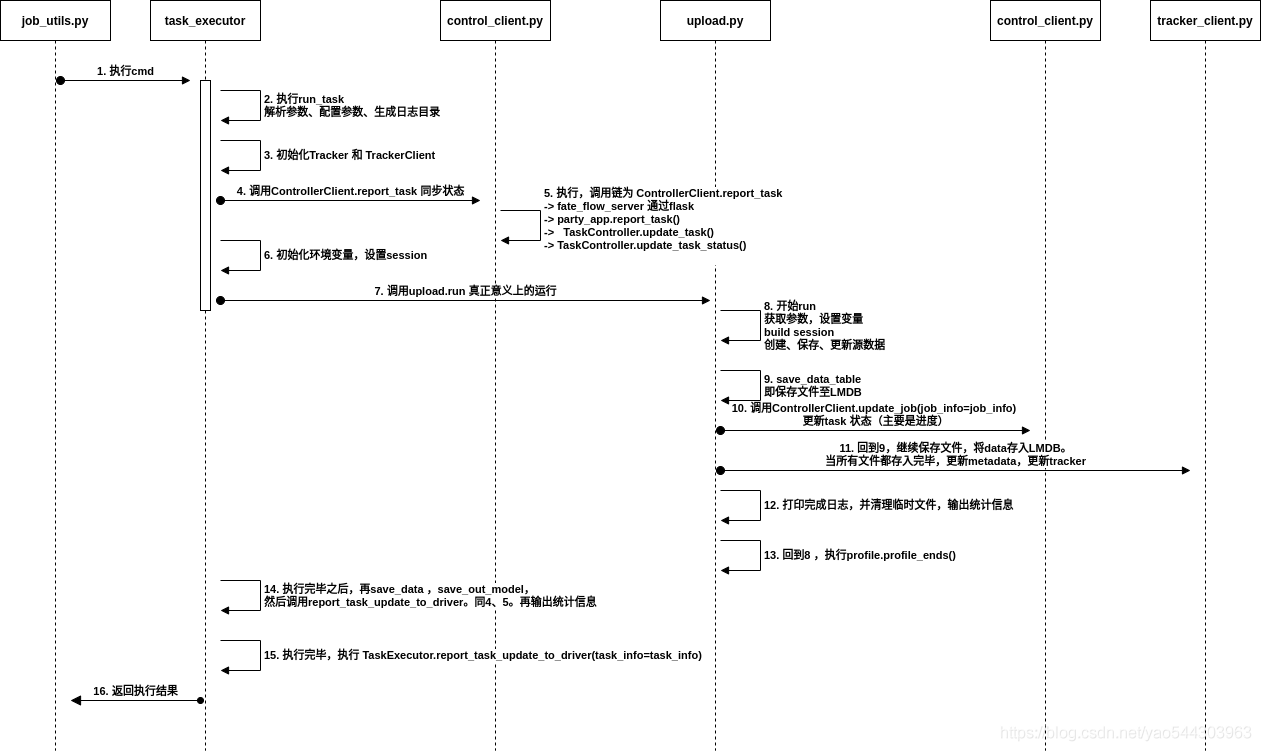

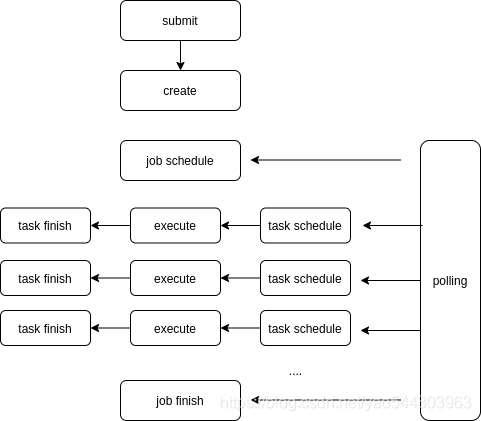

As in the previous article, dataio is launched through task_executor once the following command is logged in ${job_log_dir}/fate_flow_schedule.log:

```
[INFO] [2021-06-04 14:42:02,995] [1:140456603948800] - job_utils.py[line:310]: start process command: /opt/app-root/bin/python /data/projects/fate/python/fate_flow/operation/task_executor.py -j 202106041441554669404 -n dataio_0 -t 202106041441554669404_dataio_0 -v 0 -r guest -p 9989 -c /data/projects/fate/jobs/202106041441554669404/guest/9989/dataio_0/202106041441554669404_dataio_0/0/task_parameters.json --run_ip 10.200.224.59 --job_server 10.200.224.59:9380
```
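The arguments in this command are hard to read in one long log line. The following minimal Python sketch extracts them. The flag letters (`-j`, `-n`, `-t`, `-v`, `-r`, `-p`, `-c`, `--run_ip`, `--job_server`) are copied from the command. The names assigned to them (`job_id`, `component_name`, `task_id`, `task_version`, `role`, `party_id`, `config`) are only our interpretation of the values, not names confirmed from FATE's source. `parse_task_command` is a hypothetical helper.

```python
import argparse
import shlex

# Sample line copied from fate_flow_schedule.log.
LOG_LINE = (
    "[INFO] [2021-06-04 14:42:02,995] [1:140456603948800] - job_utils.py[line:310]: "
    "start process command: /opt/app-root/bin/python "
    "/data/projects/fate/python/fate_flow/operation/task_executor.py "
    "-j 202106041441554669404 -n dataio_0 -t 202106041441554669404_dataio_0 "
    "-v 0 -r guest -p 9989 "
    "-c /data/projects/fate/jobs/202106041441554669404/guest/9989/dataio_0/"
    "202106041441554669404_dataio_0/0/task_parameters.json "
    "--run_ip 10.200.224.59 --job_server 10.200.224.59:9380"
)

MARKER = "start process command:"


def parse_task_command(line: str) -> dict:
    """Extract task_executor.py arguments from a 'start process command' log line."""
    command = line.split(MARKER, 1)[1]
    tokens = shlex.split(command)
    # tokens[0] is the Python interpreter and tokens[1] is task_executor.py;
    # everything after that is the flag list.
    parser = argparse.ArgumentParser(add_help=False)
    # Assumption: the dest names below are our own reading of each flag.
    parser.add_argument("-j", dest="job_id")
    parser.add_argument("-n", dest="component_name")
    parser.add_argument("-t", dest="task_id")
    parser.add_argument("-v", dest="task_version")
    parser.add_argument("-r", dest="role")
    parser.add_argument("-p", dest="party_id")
    parser.add_argument("-c", dest="config")
    parser.add_argument("--run_ip")
    parser.add_argument("--job_server")
    return vars(parser.parse_args(tokens[2:]))


if __name__ == "__main__":
    for key, value in parse_task_command(LOG_LINE).items():
        print(f"{key:15} {value}")
```

In this sample, the `-c` path contains the job id, role, party id, component name, task id and task version. The task's parameter file is therefore stored in a directory tree keyed by those same values.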

The detailed execution log is written to dataio_0/DEBUG.log.
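When a job spans several roles or parties, you may find more than one `dataio_0/DEBUG.log`. The minimal sketch below locates and tails them. Its only assumption is that each log sits in a directory named after the component somewhere below `${job_log_dir}`. It does not assume any particular role/party layout. `find_component_logs` and `tail` are hypothetical helpers, not part of FATE.

```python
import sys
from pathlib import Path
from typing import List


def find_component_logs(job_log_dir: str, component_name: str,
                        filename: str = "DEBUG.log") -> List[Path]:
    """Return every <component_name>/<filename> found below job_log_dir."""
    root = Path(job_log_dir)
    # rglob searches recursively, so the exact nesting of role/party
    # directories (if any) does not need to be known in advance.
    return sorted(p for p in root.rglob(filename) if p.parent.name == component_name)


def tail(path: Path, lines: int = 20) -> List[str]:
    """Return the last `lines` lines of a text log file."""
    with path.open(encoding="utf-8", errors="replace") as f:
        return f.readlines()[-lines:]


if __name__ == "__main__":
    # Usage: python find_logs.py <job_log_dir> [component_name]
    job_dir = sys.argv[1]
    component = sys.argv[2] if len(sys.argv) > 2 else "dataio_0"
    for log in find_component_logs(job_dir, component):
        print(f"== {log}")
        print("".join(tail(log)), end="")
```

Matching on the parent directory name rather than on a hard-coded path keeps the helper usable even if the log directory layout differs between FATE versions.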